In the recent past, I stumbled across a paper entitled “The evolvability of programmable hardware”. I was, as I’m sure would be the case for anyone, immediately fascinated.

This, then, is the account of that paper, dear reader. But a word of background, perhaps, before we dive in.

Biological systems are known for their ability to tolerate faults, caused either externally (hostile environments) or internally (mutations). Indeed, their ability to withstand mutations is a major component of their ability to evolve.

Manmade systems, on the other hand, are less robust - change or remove something, and they tend to fall over. This limits their ability to gain new, useful adaptations (through random change, that is, rather than direct design). Of course, it’s something which many people have been tackling since the early 90s at least.

So, how does the squishy biological stuff do it?

Certain properties are needed for this level of biological robustness - proteins are useful examples. Proteins are built by assembling amino acids into long strings, like pearls. The order of these amino acids then causes the protein to fold into a shape, which in turn determines its function.

There are 22 standard amino acids, of which we’re able to make 10. The ‘recipes’ for these 10 are, of course, encoded in our DNA. However, it takes 3 DNA base pairs to code for one amino acid. Since there is a higher number of possible permutations of a 3 bp sequence than there are amino acids coded for, you can image that each amino acid has multiple codes. This is important - it means minor mutations in a DNA sequence might, or might not, induce a change in the amino acid sequence.

Further, even changes in that sequence might or might not induce a change in the protein’s structure, and therefore its function. Hence robustness. But also, the ability to change.

So now, the question that needs to be asked is whether programmable hardware - in this case, a certain class of electronic circuits - can be designed to have the same ability, or whether there are intrinsic differences in biological vs technological organisation that would preclude it1.

As with biological systems, technological systems are also subject to 2 forms of change - external/environmental and internal component changes. This paper focuses on the internal, because of biological counterparts’ ability to get to innovation through internal changes.

“Fault-tolerant” systems are not new. Neither is the design of circuitry which is able to adapt and evolve its function. And, of course, there’s the use of evolutionary algorithms2 to solve very complex problems. In evolvable hardware, the same principles - reproduction, mutation and selection - are used in electronics. Often, field-programmable gate arrays (FPGAs) are used.

What are FPGAs? Simply put, they’re silicon based lifeforms logic circuits, built from transistors. What it’s important to realise, is that both the functions computed by each of the logic gates assembled in an array in the circuits, and the connection of these gates, can be altered. Hence “field-programmable”.

FPGAs are used in image processing, digital sign processing and high-performance computing applications such as fast Fourier transforms. Basically, they’re commercially valuable. Which is one of the reasons why they’re the subject of the paper.

So, to the experiment itself:

Each circuit, (which computed one function each) has a specific structure, made up of the identity of, and connections between, its logic gates. As in biology, let us call this its genotype. Further, this structure denotes its function - its phenotype. Each circuit can be mapped to one function.

And the questions to be asked? I’m going to quote the authors here…

“How ‘robust’ is a typical circuit to changes in the wiring/configuration? Do neutral networks exist in this configuration space? Can circuits with significantly different configuration compute the same function? Does the organization of circuit space facilitate or hinder the adoption of novel phenotypes (logic function computations) through small numbers of gate changes?”

Basically: “if we poke it with a stick, what will it do?” [Yes, I know we’re all thinking about those snails, but please, try to remain focused on the research at hand]

For the most part, they look at circuits with 4×4 nodes, although they did also look at 3×3 and 6×6 to see the how circuit size might affect their findings. Think of all the possible permutations in structure and function of these circuits as inhabiting a phase space we’ll call a “circuit space”. And woohoo, but it’s a big one. Lots of zeroes. Circuits are ‘neighbours’ if their structure is only minimally different. That is, they live close to each other in circuit space. It’s worth reminding you, dear reader, that small changes in structure might, or might not, mean a change in function. As with changes in DNA or amino acid sequence.

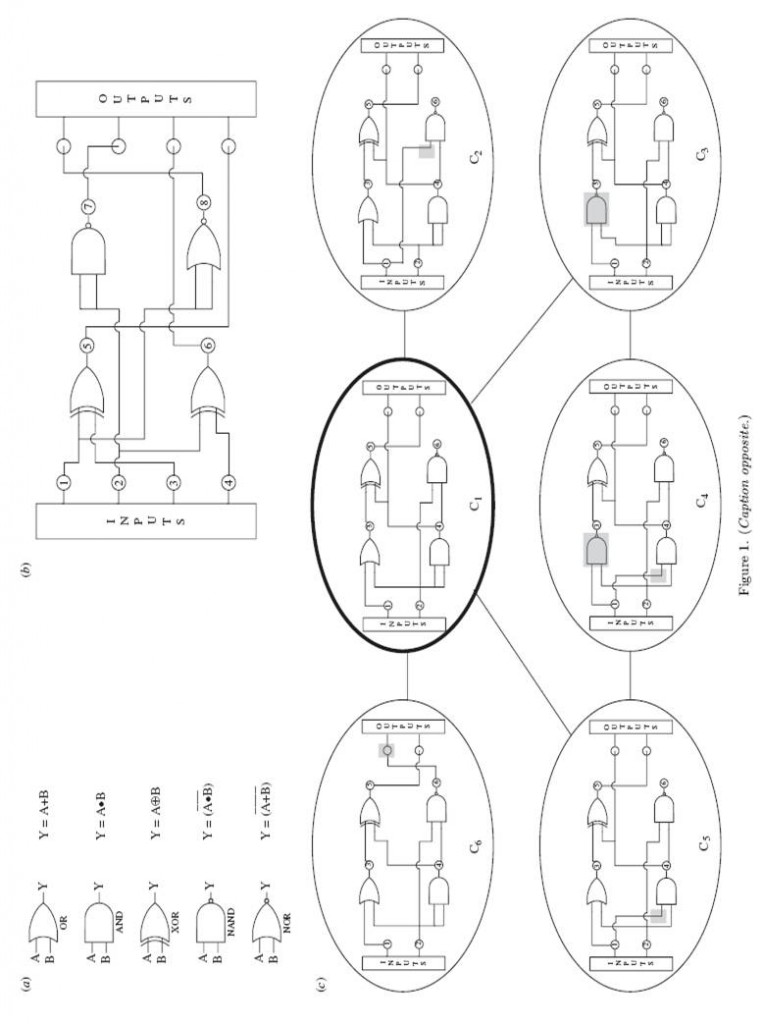

(a) Shows the standard symbols for logic gates along with the functions they represent. (b) Shows an example of a digital logic circuit comprising four (2 x 2) gates, akin to a field-programmable gate array. The circuit comprises four logic gates, represented by the symbols shown in (a). Each of the gates has two inputs and one output. The entire array has nI ¼ 4 input ports and nO ¼ 4 output ports; the array maps a Boolean function having four input variables to four output variables. The connections between the various columns or ‘levels’ in the array are ‘feed-forward’; i.e. the inputs to each element in a column of the array can come only from the outputs of any of the elements from previous columns. There are four outputs from the array, which can be mapped to any of the four gate outputs. (c) Illustrates the concept of neighbours in circuit space. The panel shows six circuits with 2 x 2 logic gates and two inputs and two outputs per circuit. The figure shows a circuit C1 (thick ellipse) and some of its neighbours in circuit space, that is circuits that differ from it in one of the four possible kinds of elementary circuit change. For example, C2 differs from C1 in internal wiring, C3 differs in the logic function computed by one of the four gates, C5 differs in an input mapping to one of the gates and C6, which differs in the output mapping. The circuit C4 differs from C1 in two elementary changes and is therefore not its neighbour in circuit space; however, it is a neighbour of C3 and C5. The differences between C1 and the other circuits are shown by shaded grey boxes. [Taken from paper; click on picture for bigger version]

Right, ok, what did they actually do?

Well, they built a simulation. Obviously. Because no one, no matter how obsessive they are, wants to sit and built squillions of circuits. To be slightly more specific, they built a vector representation, allowing them to easily compute the ‘distance’ between circuits (how difference the output bits are to each other) which would denote a change in the ‘wiring’ of the FPGA. They then choose a bunch of different permutations for input mappings, gate configurations and output mappings.

And they analysed 1,000 circuits. To see how robust a circuit was, they caused one of its gates to ‘fail’, and watched what happened.

On to the findings!

(Or, the bit where people ask “right, so we know what you did, and have survived your interminable introduction, but why should we care?)

Interestingly, they noticed that a small number of functions were computed by a large number of circuits, and vice versa.

Also, circuits computing any one function are linked in ‘neutral networks’ across this circuit space. Basically, you can move from one circuit computing function x to another computing function x, simply by making a small change (but one which, obviously, preserves its function. Just like what happens with proteins). Of course, some functions have larger neutral networks than others. The larger the neutral network, the more robust the circuits are to changes.

As circuits wander through their neutral networks, they will encounter different things. Some of their neighbours will have novel functions. Some will have the same function. Of course, as they wander further from their original position out into their neutral networks, they are more likely to encounter other circuits with novel functions. All of which makes good, intuitive sense as well (think about what happens when you travel).

In essence, it shows that yes, electronic circuits can be designed which mimic biological robustness. But this paper does quite a bit more. Previous work has shown that specific circuit functions can be designed to be fault-tolerant. For example, people have forced circuits to ‘learn’ how to better and better tolerate random gate failures and noise3.

What makes this paper interesting is that it’s not specific. It shows that this ability to be fault-tolerant applies to many different types of circuits and functions, and that for each function, there will be circuits that are significantly more or less robust than others, without even needing things like redundant gates.

In other words - a more fault-tolerant circuit doesn’t necessarily need to be more complex. In our increasingly energy-aware times, this is good. Also, evolutionary algorithms (or other such brilliant things) can be used to find these uber-circuits.

Also: sometimes, one wants a circuit which can easily change to computing a new function. Like in modular robots, which we use for deep sea mining, space exploration, search and rescue, and other environments hostile to squishy, meaty4 lifeforms such as ourselves. In such instances, the ability of said robots to reconfigure their circuitry, for example for navigation, could be very handy. It means the robots could learn5. For this, it’s particularly useful that neighbours with novel functions exist in circuit space – to put it more simply, if you’re trying to redesign your circuitry to do something new, it would be nice to do so having to make as few changes as possible.

Hell, it even means self-repairing circuitry could be designed, even if some parts fail!

So, what are the costs of reconfiguration? Well, time, really, and reconfig data storage space, and of course, both depend on how much reconfig work is needed. Also, if one designs everything as the authors did, so that only minimal (partial) reconfig is needed, then everything can go on working, rather than everyone having to stop to move around. Sort of like closing one lane on a road, as opposed to closing a whole tunnel6 road.

Another general observation? The more complex a circuit (i.e. the more gates it has), the more robust it is. This makes sense. It’s why we can survive many changes to our genome and be just fine, whereas, for example, viruses can suffer severe loss of function if their genome changes much.

Also, the more complex the circuit, the larger its neutral network in circuit space. And there will be some functions that little circuits can’t compute. (I have an image in my head of a small person being asked to do some outrageous piece of multiple division, heh).

An example, from the authors:

“A case in point is again the space of four-gate circuits. This space comprises 4.67 x 108 circuits. These circuits can compute only 4.05 x 106 different functions, a small fraction of the possible 1.8 x 1019 Boolean functions with four inputs and four outputs…These considerations show that robustness and evolvability of programmable hardware have a price: increasing system complexity.”

Finally - as in biology, so again tech: there are some components which aren’t used directly for the computation/function, but are nonetheless important. They allow extra variability, and are key in the ability to develop new functions. It’s also why very simple/small circuits may not be as ideal, even though they are simple and elegant – their pared-down nature means they don’t have these extra bits.

There are limitations, though, to the research: each silicon chip has minute differences, and external factors such as temperatire DO play a role. And a computer simulation is, axiomatically, not the same thing as real life. And the authors aren’t sure what would happen if you scaled up to circuits containing thousands/millions of logic gates. And, of course, to get around the mahoosive numbers involved anyway, it was necessary to perform sampling, rather than measuring everything.

So, there you have it. It’s possible to design fault-tolerant, adaptive electronic circuitry. Bring on our silicon-based overlords.

Disclaimer: until I read this, I had no idea what FPGAs are. Or logic gates. I am, of course, immensely ashamed. However, it is again an example of how judicious use of search engines, and some curiosity, can bring wonderful things. (Yes, this is aimed at people who are too lazy to look things up sometimes)

Also: you think this is long? You should see the original paper…

—————————-

1 Earlier this year, some fascinating research was published which explained why E. coli bacteria crash less often than Linux. Seriously.

2 Evolutionary algorithms. Sigh. These are one of the reasons I began a genetics degree in the first place. Algorithms behaving like little, sexually reproducing beasties. It’s delicious.

3 Watch out for my upcoming post on the Creativity Machine

4 Bring on bionics

5 That’s a good thing

6 Wellingtonians will understand…

References:

UPDATE: It’s online, finally!

Raman, K., & Wagner, A. (2010). The evolvability of programmable hardware Journal of The Royal Society Interface DOI: 10.1098/rsif.2010.0212

![]()