UPDATE: Please see the end of this piece, where I give examples of possible ‘good’ metrics

The subject of innovation/science/tech/R&D, and its contribution to a country’s economy and society, appears to be top of mind for policymakers, funding bodies and scientific bodies all over the world.

In New Zealand alone, MSI has set a target of doubling the value of science and innovation for New Zealand. Of course, this raises the question - how would one even begin to measure that?

Enter the very complex, very fraught, and very interesting world of science metrics. Metrics are used in all policy domains to help policymakers make decisions, and many fields have well-established, useful metrics.

Not so science and science policy, where investment decisions are made without sound, meaningful data and metrics which encompass the full value created by science.

Yesterday, I was privileged to attend a lecture at MSI by renowned economist Dr Julia Lane, on loan briefly from the US. Titled ‘Measuring the value of science’, it tackled exactly this issue.

Amongst other achievements, Julia developed and led NSF’s Science of Science & Innovation Policy (SciSIP) program, which was begun after policymakers in the US realised that they had ‘no idea how to make science investments’ (in an evidence-led fashion), and after a clarion call from Jack Marburger (1) for better science benchmarks (metrics).

Julia talked for almost an hour and a half, and there’s a tonne of additional reading material on this subject, so I’m not going to attempt to summarise it all. Instead, I’m just going to pull out some of the main points of her lecture, and comment where I think appropriate. I’ll reference where I can, and you’ll find further reading links at the bottom of this post, too.

The challenge

The current data infrastructure is inadequate for making sound, evidence-based decisions about science, science investment and so forth. At the moment, science funding is something akin to a dark art, based on anecdote or poor metrics (eg. bibliometrics, but more on that shortly) rather than sound, empirical data and metrics.

We also don’t understand how science investment and its products interact with other aspects of societies and mechanisms. It’s one thing to wave one’s hands and say ‘a miracle happened’, but quite another to actually track how Grant X rippled through society, providing both economic and other benefits (see ‘Further notes’ at end), over a time period of years to decades.

Part of the difficulty here is that the relationship of science to innovation is non-linear, and its inputs and outputs/outcomes are extremely complex, over hugely varying time frames, amongst very complicated networks of people (from teams to organisations to political systems both national and international). There’s also the challenge of conveying the results of any analysis of all of this to the public and to policymakers. Hell, even terms such as science, technology, innovation and R&D have different definitions depending on who you speak to.

Current metrics

There are a range of metrics used, of which probably the most well-known and widely-used are bibliometrics. These look at citations, the publications produced by a researcher, and the journals in which said publications were, well, published. Ask most researchers how they feel about their entire corpus being measured this narrowly, and the dreaded Journal Impact Factor, and you’ll likely be treated to a groan and some variation on * headdesk * (or the FFFFUUUUU meme).

And that’s the thing - bibliometrics weren’t developed to measure science. They were developed to help us understand the corpus of publications (certainly very useful), but are terrible for measuring science as a whole. They’re slow, narrow, secretive, open to gaming, and have a host of other issues (I’ll not go into that, as that’s the subject of lots of research out there. Just go have a look).

Herein a cautionary tale about metrics: what you measure is what you get.

A lesson learned by Heinz, by Sears, by Dun & Bradstreet, and no doubt many more, is this: what you measure, and how, influences what is produced. Think of it as the observer effect writ large.

And so it is with measuring science using bibliometrics. Recent research has shown that falsification, fraud and misconduct in scientific publishing are on the increase, while other research has suggested that the situation is far, far worse (2), (3). And all because of something very simple - we’ve created perverse incentives. Rather than rewarding researchers for the creation and transmission of new scientific ideas and knowledge, we’re rewarding them for citations, producing published papers and, often, poor-quality research.

Further, the world’s moved on - published papers are no longer the only activities which support the creation and transmission of scientific ideas and knowledge. Other, more modern approaches include blogs, mentoring, prototyping, perhaps even the production of YouTube videos (4), training students, and so on.

Burden

The current system also places an enormous burden on researchers, who spend vast proportions of their time (42% of the time of PIs (Principal Investigators, or lead scientists) in the US, for example (5)) on administrative tasks.

To make matters worse, it’s work for which they’re not trained, at which they’re crap, which they hate, and which wastes very valuable time which could actually be spent on, well, the research for which they’re being paid.

How to even begin fixing this?

It’s not an easy task. Mammoth doesn’t even begin to describe it. But there ARE ways which Julia and her colleagues have been working on which can address this.

Of these, there are two particularly important cornerstones.

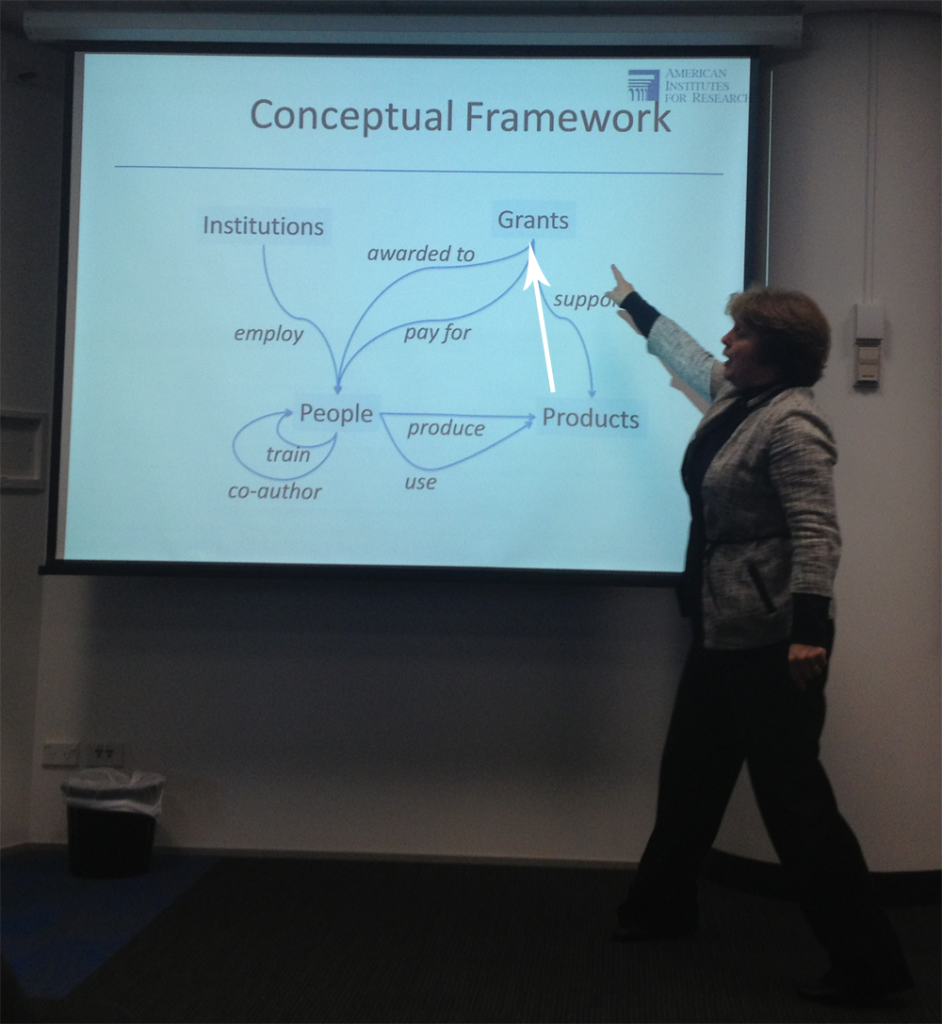

The first is the development of an intellectually coherent framework for understanding how people, grants, institutions and scientific ‘products’ influence each other.

Of key importance here is the realisation that science is done by people, so the core of any framework should have human beings. Not citations, note. Or grants. Or anything else that couldn’t also be called a meat popsicle.

Grants are the intervention, and funding (and measurement) is what affects the behaviour of the researchers and how networks are formed, grow and survive. The interest should be in describing the products (a range of different sorts of products, from publications to lives saved) that they produce, which could be YEARS after the initial intervention.

This is a longitudinal, interactive process. And of course, institutions form context, as their facilities, cultures and structures play into what can be done.

The second is that a reliable, joined-up data infrastructure (DI) will be necessary* to provide all the information the framework has indicated is important in the measurement of science and scientific outcomes/products.

Key characteristics of the infrastructure are that it should be:

- Timely (i.e. information is up to date)

- Generalizable and replicable

- The underlying source data should be OPEN and generally AVAILABLE, not “black box”. This also allows it to be reused for different purposes. It also means it can be generalised, replicated and validated, and can therefore be seen as actually useful.

- Oh yeah, also - open means it can be continually improved by the community. A Good Thing :)

- Low cost, high quality, and reducing the burden of reporting on the scientific community

Here, thankfully, technology is on our side. As are all sorts of researchers in social science, economics, computer science, and so forth, all of whom are keen to develop something, internationally, which works :)

Some (there are more!) of the sorts of technology we could use to build this sort of DI include:

- Visualisation

- Topic modelling, graph theory, etc.

- For example, topic modelling can be used to better understand which scientific topics (by looking at papers, reports, grant applications etc) occur in groups, not only bloody useful in and of itself, but also great for things like apportioning where grant funding went.

- Social networks

- Automatic scraping of databases (so PIs etc don’t need to waste time on manual reporting of their work, outputs, its impact, and other such tiresome and time-consuming tasks)

- Big Data (in this context)

- Disambiguated data on individuals

- Automatic data creation (just have researchers VALIDATE it, not create it)

- New text mining techniques
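To make the ‘apportioning where grant funding went’ idea from the topic modelling bullet a bit more concrete, here’s a minimal sketch in Python. It assumes a topic model has already been run and has given each grant a set of topic weights; the grant IDs, amounts, topic names and weights are all made up for illustration, and this isn’t how Julia’s team necessarily does it.

```python
from collections import defaultdict

# Hypothetical grants. The "topics" weights stand in for the output of a
# topic model run over each grant's application text (assumed, not real data).
GRANTS = [
    {"id": "G1", "amount": 100_000, "topics": {"genomics": 0.75, "statistics": 0.25}},
    {"id": "G2", "amount": 50_000, "topics": {"statistics": 0.5, "ecology": 0.5}},
]


def apportion_funding(grants):
    """Split each grant's amount across its topics in proportion to the weights."""
    totals = defaultdict(float)
    for grant in grants:
        # Normalise in case the weights for a grant don't sum to exactly 1.
        weight_sum = sum(grant["topics"].values())
        for topic, weight in grant["topics"].items():
            totals[topic] += grant["amount"] * weight / weight_sum
    return dict(totals)


funding_by_topic = apportion_funding(GRANTS)
for topic, dollars in sorted(funding_by_topic.items()):
    print(f"{topic}: {dollars:,.0f}")
```

The hard part, of course, is the topic model itself, not this arithmetic. But once the weights exist, working out where the money went by topic is a few lines of work rather than a manual reporting exercise.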

Even better, all of these lovely outputs can be pulled together, as Julia and co have done, into an API. Which is open, and allows any individual/organisation/funding body/etc to pull out and slice and/or aggregate all of the information in whichever ways make sense for them. Networks of science. Publications and patents by region. How a grant ended in the education of students, and their forward progress in science. Anything you can dream of, really, as long as the data’s there.
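As a rough illustration of what ‘slice and/or aggregate’ might look like once the records are in hand, here’s a small Python sketch. The record layout, field names and values are my own assumptions for the example, not the format of any real API.

```python
from collections import Counter

# Hypothetical flat records, as an open API might return them (invented data).
RECORDS = [
    {"type": "publication", "region": "Wellington", "grant": "G1"},
    {"type": "patent", "region": "Auckland", "grant": "G1"},
    {"type": "publication", "region": "Auckland", "grant": "G2"},
    {"type": "publication", "region": "Auckland", "grant": "G1"},
]


def count_by(records, *keys):
    """Count records grouped by whichever fields the caller cares about."""
    return Counter(tuple(record[key] for key in keys) for record in records)


# Publications and patents by region.
by_region_and_type = count_by(RECORDS, "region", "type")

# Outputs traced back to each grant.
by_grant = count_by(RECORDS, "grant")

for group, n in sorted(by_region_and_type.items()):
    print(group, n)
```

The point of the design is that the grouping keys are chosen by whoever is asking the question, so a funder and a university can slice the same open data in different ways.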

Of course, there is a problem here, and no doubt sharp readers will have noticed it.

The data itself.

There’s data all over the place, but it’s fragmented and isn’t always open, accessible or of useful quality. Examples include HR databases (organisations), patents (patent offices), publications (journals), publications/presentations (organisational libraries), grants (funding bodies), online profile databases for CVs and so on. The systems used to keep the data may be proprietary, meaning they don’t talk to anything else. Their metrics may be different, again making it difficult to merge them. All SORTS of problems abound.
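To show why merging is fiddly even in the simplest case, here’s a toy Python sketch that pulls three fragmented sources into one profile per researcher. Everything in it is hypothetical: the names, the rows, and especially the `normalise` function, which is a deliberately crude stand-in for real disambiguation (it would happily merge two different people who share a name).

```python
def normalise(name):
    """Crude matching key: lowercase, drop punctuation, sort the name parts."""
    cleaned = "".join(ch for ch in name.lower() if ch.isalpha() or ch.isspace())
    return " ".join(sorted(cleaned.split()))


def merge_sources(**sources):
    """Group rows from every source under one key per (apparent) researcher."""
    profiles = {}
    for source_name, rows in sources.items():
        for row in rows:
            key = normalise(row["name"])
            profiles.setdefault(key, {}).setdefault(source_name, []).append(row)
    return profiles


# Invented rows; each source writes the same person's name differently.
hr = [{"name": "Ana Silva", "institution": "Example University"}]
grants = [{"name": "Silva, Ana", "grant": "G1"}]
publications = [{"name": "ana silva", "title": "A made-up paper"}]

profiles = merge_sources(hr=hr, grants=grants, publications=publications)
print(sorted(profiles["ana silva"].keys()))
```

Real systems need persistent researcher identifiers and proper validation (ideally by the researchers themselves) rather than string tricks like this, which is exactly why the data infrastructure work matters.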

But they’re fixable, as systems such as Brazil’s decade-plus-old Lattes system show - one of the cleanest databases on earth, it contains records for over a million researchers, in over 4,000 institutions in Brazil.

There are challenges, certainly, but funding, willpower and buy-in from funders and researchers mean databases can be opened up, cleaned up, and made interoperable. It’s the whole POINT of federated data infrastructure work :)

Other examples where this sort of work is being done include STAR METRICS, R&D Dashboard, COMETS, Patent Network Dataverse, and others still in development.

And so?

A fair question. So, a system is built. It takes lots of lovely data and puts it together, allowing one to track all sorts of things. Where a grant’s money goes. How it ripples out through society in the form of training for students, publications, public science communication, patents and links with business, commercialisation and more.

But how does that actually help a bunch of people sitting around a table trying to decide which projects to fund? Or how to demonstrate that a given piece of science has value?

Well, there’s no easy answer. Each group will be looking for different characteristics and outcomes. They’ll have, in other words, different criteria for measuring the worth of a project and its projected value.

But any such group won’t be trying to assemble relevant information from scratch, from piece-meal sources which may be distorting.

Instead, they’ll have the information they need to develop real, scientific metrics. Which will help us all to develop a system which fosters the creation and transmission of knowledge, of real, GOOD science.

‘Tis a consummation devoutly to be wished. ***

And thank you, Julia. You inspired me. I’m so thrilled to see that there are people out there applying sense to science measurement, and an understanding and love of the subject matter :)

—-

An opportunity for NZ

The US, while having oodles more money than NZ, is also very big, very complicated, and still hasn’t properly cracked this yet. I think NZ government, policymakers, and science bodies should see this as an opportunity - we’re smaller, less complicated, and there’s no shortage of people here and overseas who’re willing to help and share expertise. We already have systems like Koha, and researchers like Shaun Hendy (who looks at innovation networks in NZ, amongst other things), and all of those who attend conferences such as Living Data.

I’m sorry. I know this one was long :) And I _still_ feel like I left a tonne out, sigh.

—-

Further thoughts:

On the subject of government funding of science, Julia had some choice things to say. Firstly, and more widely discussed in a blog post by John Pickering, was that government should not be funding research which has a strong economic return. That is the business of private enterprise. Rather, government should be funding work which has strong public value, if not (possibly not yet) strong direct dollar value. Such as ARPANET in the US. Additionally, some basic research WILL fail, and it’s good to let people know about that - it’s of value to both industry and science, to prevent people reinventing circular objects.**

Julia was also quite vehement about the fact that any government (which should fund a mix of basic and applied science) which expects short-term economic results, especially to some sort of dollar value, is missing the point. Government and policy makers shouldn’t be interested in short-term return on science investment, but rather in which areas are growing nationally and internationally, and what’s happening generally. Of course, the identification of ‘hot’ areas is interesting, as it’s something of an endogenous process (more funding in an area makes it hotter, in many cases, while hotter areas get more funding. Hello positive feedback loop).

Additionally, science does not only have direct economic benefits, and any attempt to quantify it purely in those terms may well underestimate science’s true value. Instead, its public value should also be taken into account. These outcomes are public, nonsubstitutable and oriented to future generations, and capture elements such as competitiveness, equity, security, infrastructure and environment (6).

As to whether you can know for sure something’s not a bubble? You can never know if something’s a bubble, or just about to revive after looking dead (eg. graph theory). Any time you make a decision you may be wrong, but you may as well make it with SOME evidence, which is what this framework and DI are designed for.

Examples of better metrics

/waiting on permission to show something here. Keep an eye out for updates. UPDATE: Examples shown below.

—-

References and further reading

(1) Marburger, J. (2005). Wanted: Better Benchmarks. Science 20 May 2005: 308 (5725), 1087. doi:10.1126/science.1114801

(2) Ioannidis, J. P. A. (2005). Why Most Published Research Findings Are False. PLoS Med, 2(8), e124. doi:10.1371/journal.pmed.0020124

(3) Young, S., & Karr, A. (2011). Deming, data and observational studies. Significance, 8(3), 116–120. doi:10.1111/j.1740-9713.2011.00506.x

(4) Lane, J. (2010). Let’s make science metrics more scientific. Nature 464, 488-489 (25 March 2010). doi:10.1038/464488a

(5) Lane, J., Bertuzzi, S. (2011). Measuring the results of science investments. Science 11 February 2011: 331 (6018), 678-680. doi:10.1126/science.1201865

(6) Lane, J. (2009). Assessing the impact of science funding. Science 5 June 2009: 324 (5932), 1273-1275. doi:10.1126/science.1175335

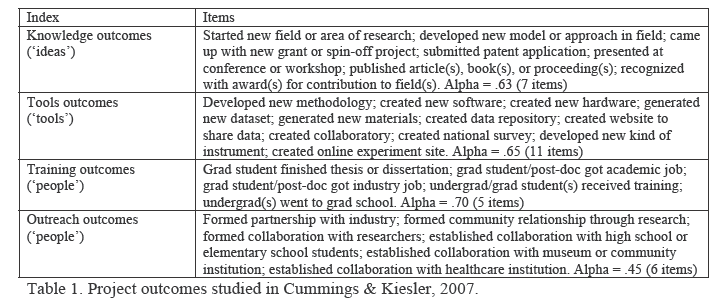

(7) Kiesler, S., & Cummings, J. (2007). Modeling Productive Climates for Virtual Research Collaborations. Cummings, J., Kiesler, S. (2007). Coordination costs and project outcomes in multi-university collaborations. Research Policy 2007;36(10):1620-1634. Saw table in as yet unpublished chapter of “Next generation metrics: harnessing multidimensional indicators of scholarly performance“, edited by Blaise Cronin and Cassidy Sugimoto, MIT Press

See also:

The Science of Science Policy: A Federal Research Roadmap.

TED - Derek Sivers: How to Start a Movement

Science and the economy: Julia Lane on Radio New Zealand’s Nine to Noon programme, 10 Oct 2012

—-

* This year seems to be The Year I Hear A Lot About Federated Data Infrastructure Systems (did I mention I went to the awesome Living Data conference?) :P

** There’s a growing movement to divorce publication of papers from publication of results. The publication system is biased towards papers with positive results, not negative. Publishing ALL results, and openly, would mean everyone had access to said results, and would again save a HUGE amount of wasted time as people unknowingly repeat each others’ research. It also means advances could be made much more quickly, by the secondary analyses of different sets of data.

*** Consummation as in end. As in result. Not death. Although, of course, anything of this sort will be iterative. Poetic license, m’kay?! :P
